Experimental Music

Liquidities (2023)

Written in collaboration with Louise Devenish

For slinky-prepared Vibraphone and Electronics

Developed for a performance in March 2023, this is the second in a series of short, co-composed works that prepare or extend a familiar percussion instrument with something playful – in this case, a toy slinky. Finding ways to use objects for their sound potential is known as an exploration of instrumentality. In this work, this began with finding an acoustic vocabulary using the slinky as a sort of ‘mallet’ on the vibraphone. This drew slippery, superficial sounds from the surface of the vibraphone bars that sound a little bit magical, and immediately conjured in our minds images of water surfaces with light bouncing off them. These sounds were then sampled and manipulated to find a complementary electronic vocabulary, woven into a fixed media track by Stuart.

The Anthropomorphic Machine (2023)

Entropic States (2021)

[Alluvial Gold Installation] (2020)

counterpoise (2020)

/ˈkaʊntəpɔɪz/

Commissioned by Decibel New Music Ensemble as part of the 2 Minute From Home project

For Flute, Bass Clarinet, Viola, Cello, Chaotic Oscillator and Circuit Bent Toy


The work isn’t intended to be a direct commentary on the Coronavirus pandemic, but is not intended to be ambivalent either. This work is concerned with the counterpoise that balances life on the planet as we know it, and that keeps human civilisation from engaging in civil or world war. The global impact of COVID-19 has made apparent the very tenuous balance in which human mortality resides, between human vulnerability and resilience. Chaotic systems exhibit this kind of volatility, and, as in a tensile system, there is a boundary at which the system falls into catastrophe. Humankind is quick to respond worldwide to the deterioration of these systems, but never before has the world been so challenged by the “age of disinformation”.

The score is an animated graphic score that runs in the Decibel ScorePlayer and uses the app’s canvas draw mode.

Percipience: After Kaul (2019)

Written in collaboration with Louise Devenish

For 3 Overtone Triangles and Electronics

The overtone triangle is a design by Matthias Kaul. Instructions for making an overtone triangle can be found at https://www.youtube.com/watch?v=jZT0cejiVnI. In Percipience, three overtone triangles should be suspended from the ceiling (or from a tall gong rack). The largest and most resonant should be in the centre, with the remaining two triangles to the right and left of it. Mallets required include a single hard yarn mallet (e.g. a Balter green) and a pair of triangle beaters with rubber handles. The score should be read in the Decibel ScorePlayer application on an iPad; however, it is also available as a hard-copy paper score. The work was premiered at the Perth Institute of Contemporary Arts on 28 June 2019.

Noise in the Clouds (2017)

Commissioned by Decibel New Music Ensemble as part of the Electronic Concerto concert in 2017

For Laptop Soloist, Sextet (3 acoustic duos), Fixed Media and Live Electronics (stereo), and Live Generated Visuals

Noise in the Clouds is a laptop concerto written for laptop soloist, visual projection, sextet ensemble, and live electronics. Whilst the concerto form has been understood for hundreds of years as a work for soloist and ensemble, the writing of concertos specifically for laptop soloists has only emerged within the last few years, and therefore this combination is free of the constraints of other established instrumentations. The work explores chaotic phenomena of varying types: chaotic audio oscillators, chaotic phenomena as found in nature, and the process of iteration as a visual narrative. These expressions became a mechanism or structure for generating the sound universe, musical structure, the compositional process, the visualisation, and the notated score. The laptop soloist performs an instrument developed by the composer which derives all of its sounds from chaotic 'strange attractors' that the soloist controls expressively through 3D movements of the hands. This instrument expands on a 2D multi-node timbre morphology interface developed by the composer, applying it instead to modulating the generative parameters of chaotic audio oscillators.

Particle III (2016-7)

For Solo Laptop and 16.1 channel sound system

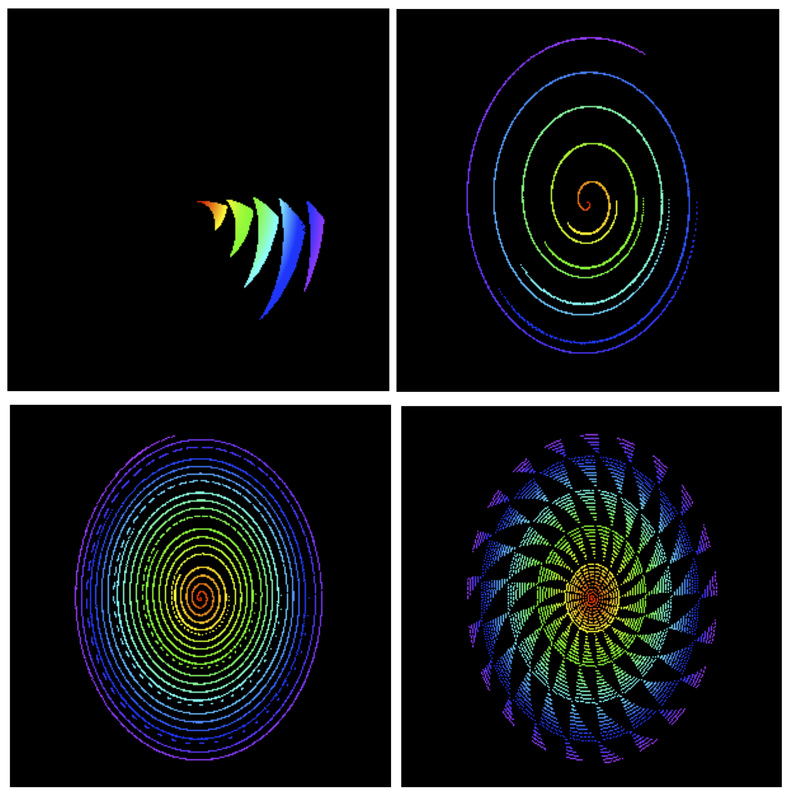

This work explores examples of spatial motion as described by Trevor Wishart, such as harmonic motion, en masse. Certain psychoacoustic effects are explored through the medium of space, such as an effect I describe as 'spatial beating', which arises when the spatial movements are modulated at frequencies below or within the lower audible frequency range (0-40 Hz). These LFO-like effects are experienced as a spatial shimmering or pulsation of the sound source. This work largely explores spectral spatialisation, and involves the spatial positioning of spectral bands at the micro-level (audio-rate). The images show instantaneous spatial positions of different spectral bands, colour-coded according to their frequency. The work also explores temporally disconnected spatial displacements of frequency bins, and other strange effects resulting from numerical modifications and morphologies/distortions of the coordinates of frequency in space. All of the processes explored in this work involve sound shapes where all spectral bins have independently computed trajectory curves.
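The idea of 'spatial beating' can be illustrated with a small sketch. This is not the software used in the piece: the ring of 16 evenly spaced speakers, the equal-power panning law, and the names `band_azimuth` and `ring_gains` are all assumptions made for illustration. Each spectral band is given its own azimuth, which is wobbled by a sinusoidal modulator whose rate can sit anywhere in the 0-40 Hz range mentioned above.

```python
import math

def band_azimuth(t, base, depth, rate_hz):
    """Azimuth (radians) of one spectral band at time t (seconds).

    base    -- resting azimuth of the band (assumed parameter)
    depth   -- modulation depth in radians (assumed parameter)
    rate_hz -- modulation rate, e.g. somewhere in 0-40 Hz
    """
    return base + depth * math.sin(2.0 * math.pi * rate_hz * t)

def ring_gains(azimuth, num_speakers=16):
    """Equal-power gains for a source on an evenly spaced speaker ring.

    Only the two speakers adjacent to the source receive signal.
    """
    pos = (azimuth % (2.0 * math.pi)) / (2.0 * math.pi) * num_speakers
    lo = int(pos) % num_speakers          # speaker just below the source
    hi = (lo + 1) % num_speakers          # next speaker around the ring
    frac = pos - int(pos)                 # position between the two
    gains = [0.0] * num_speakers
    gains[lo] = math.cos(frac * math.pi / 2.0)
    gains[hi] = math.sin(frac * math.pi / 2.0)
    return gains
```

Calling `ring_gains(band_azimuth(t, base, depth, rate_hz))` for successive values of `t`, with a different `base` and `rate_hz` per band, gives each band its own independently moving position.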

Intervolver (2016)

Commissioned by the Western Australian Laptop Orchestra (WALO)

9 laptops

8.1 surround sound system (8 separate loudspeakers arranged equidistantly in a circle, with sub-woofer)

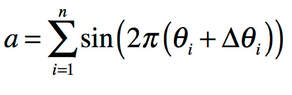

This work began by modifying some existing Java code I created for an iterative phase shifting additive synthesizer. The original design of this additive synthesizer was based on a simple algorithm (a continuous differential equation as shown below) that computes the individual changes in phase for a bank of oscillators, iterating this process through a vector block of samples, and finally accumulating the sinusoids generated, for i number of oscillators:

This code was further modified so that there were three different timed structures. The first is an iterative process that computes the phase shift of 350 independent oscillators. Second, the phase shift applied to these oscillators is modulated at audio rate in a 1024-sample repeating cycle that determines the bank of frequencies by a lookup table. These two isochronic structures align differently, meaning that the phase shifts are modulated every audio sample, with this 1024-sample vector rotated by 26 values each time the cycle repeats. Third, the values of the 1024 samples determining the amount of phase shift are influenced by a slower evolving system, which cyclically changes the phase shift of 20 of the 350 oscillators each second. It takes 51.2 seconds for this system to cycle through the entire bank of oscillators. Each laptop performer has a copy of the same instrument, and follows a graphic score inside the Decibel ScorePlayer.
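The interplay of the oscillator bank and the rotating table can be sketched in a few lines. This is a minimal illustration and not the Java code used in the piece: only the figures 350, 1024, and 26 come from the description above, while the way the table value scales each phase increment, the sample rate, and every name (`rotate`, `cycles_until_realigned`, `render_block`) are assumptions.

```python
import math

NUM_OSC = 350        # oscillators in the bank (from the text)
TABLE_SIZE = 1024    # length of the repeating phase-shift cycle (from the text)
ROTATION = 26        # rotation applied each time the cycle repeats (from the text)
SAMPLE_RATE = 44100  # assumed; the text does not give a sample rate

def rotate(table, n):
    """Return a copy of table rotated left by n positions."""
    n %= len(table)
    return table[n:] + table[:n]

def cycles_until_realigned(size=TABLE_SIZE, step=ROTATION):
    """Number of cycle repeats before the rotated table is back at its start."""
    return size // math.gcd(size, step)

def render_block(phases, base_incs, table, start, length):
    """Accumulate one block of samples from a bank of sine oscillators.

    phases    -- current phase of each oscillator in radians (updated in place)
    base_incs -- nominal phase increment per sample for each oscillator
    table     -- phase-shift lookup table, read once per audio sample
    start     -- table index of the first sample in this block
    Assumption: the table value simply scales every oscillator's increment.
    """
    out = []
    for n in range(length):
        mod = table[(start + n) % len(table)]
        total = 0.0
        for i in range(len(phases)):
            phases[i] = (phases[i] + base_incs[i] * mod) % (2.0 * math.pi)
            total += math.sin(phases[i])
        out.append(total / len(phases))
    return out
```

Under the assumption that the rotation accumulates by 26 positions per repeat, the table returns to its starting alignment after 1024 / gcd(1024, 26) = 512 repeats, which is the value `cycles_until_realigned()` returns.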

Rumblestrip (2016)

A site specific work commissioned by the City of Perth

Solo Laptop, 2 iPods, and 14.2 channel sound system

Rumblestrip (a site-specific multi-work ‘simultaneity’ in collaboration with visual artists Erin Coates, Neil Aldum, Simone Johnston, Loren Holmes)

- Coates, E., Aldum, N., Johnston, S., Holmes, L. & James, S. (2016). Rumblestrip.

- Coates, E., Aldum, N., Johnston, S. & James, S. (2016). Eternal Boglap.

- Aldum, N. & James, S. (2016). Stephenson-Hepburn Memo (Trolley).

- Johnston, S. & James, S. (2016). TITLE (Breath).

- Aldum, N. & James, S. (2016). Clustertruck.

- Coates, E., Aldum, N., Johnston, S. & James, S. (2016). Slushbox (Tunnel).

- Coates, E., Aldum, N., Johnston, S. & James, S. (2016). Totem (Tower).

Feather (2015)

A collaboration with Australian composer Cat Hope

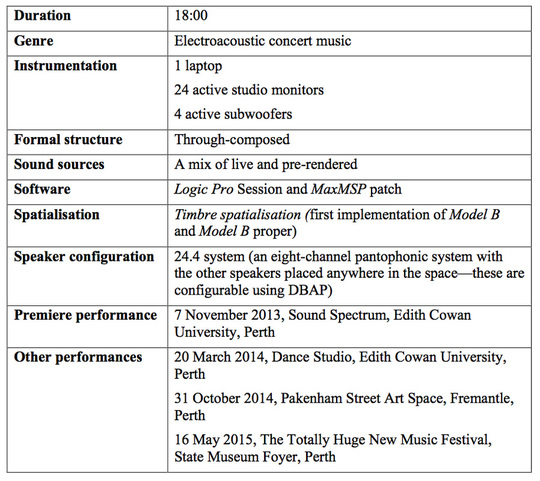

The Overview Effect (2013)

For Solo Laptop and 24.4 channel sound system

Additive Recurrence (2013)

Veden Ja Tulen Elementit (2012)

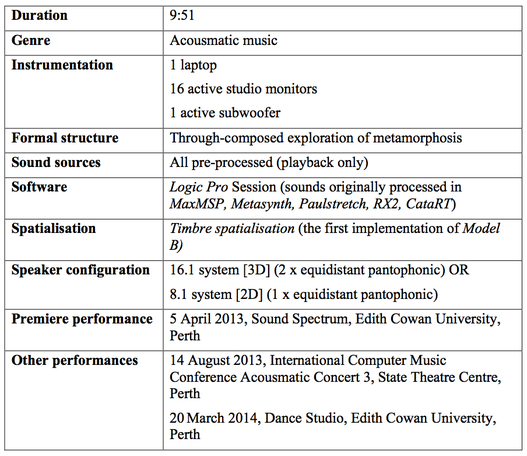

For Solo Laptop and 8.1 or 16.1 channel sound system

While I was in residency at the SpADE facility at DMARC, University of Limerick, my focus shifted towards applying timbre spatialisation to found sounds and environmental noises rich in harmonic content. These proved to be effective when diffused across a multichannel system. Exploring different trajectories led me to discover the immersive effects of high-frequency spatial texture, produced by using a range of asynchronous and noisy trajectories for timbre spatialisation. These effects reminded me of natural environments and ecologies where sounds appear to come from many different directions around the listener, with no clear localised point of directivity.

This experience in Ireland led to Veden Ja Tulen Elementit (2012) (the elements of water and fire), an acousmatic work that explores several different approaches to spatialisation practice. The composition began largely as an experiment in sound design, in virtual immersive environments and spaces. It was inspired by the listening modes discussed by Pierre Schaeffer (1977), Michel Chion (1983), Barry Truax, Katherine Norman (1996), David Huron (2002), and Smalley (2007). This piece, like other acousmatic music, encourages what Schaeffer (1977) describes as the reduced listening mode, exploring various kinds of immersive sound shapes generated through timbre spatialisation.

The work was presented at the 2013 ICMC.

Two environmental source sounds were used: a rippling river and burning embers. The premise of the work was to begin with the sound of the babbling brook, spatialising this immersively so as to encourage a referential listening mode. With an interest in realism and hyper-realism, the opening is intentionally aimed at creating a real sense of place. However, the piece is not intended to be a soundscape, and as I have an interest in the surreal, very quickly this familiar environment begins to distort into the unfamiliar. As a composer, I cannot say I was interested so much in what these sounds represented for the listener as in taking the listener from the familiar gradually towards an unfamiliar sound world. The opening sound sample is processed in accumulating and more abstracted ways, gradually shifting this sound away from its original associated references. The piece concludes when increasingly processed water samples finally undergo a metamorphosis into the samples of the burning embers.

This work relates to the concept of the phantasmagorical written about by Curtis Roads (1996) with respect to physical modelling synthesis. Some physical models can extend real-world possibilities to dimensions of the absurd: for example, a musical instrument that involves plucking the cable wires of the Golden Gate Bridge in San Francisco. In the real world such possibilities are impractical; however, in the algorithmic world we are not bound by these constraints. Similarly, in sound spatialisation we have spatial movements that would be impossible in the natural world, and rather than avoid them, we can explore these as further possibilities of expression, above and beyond what is possible in the physical world.

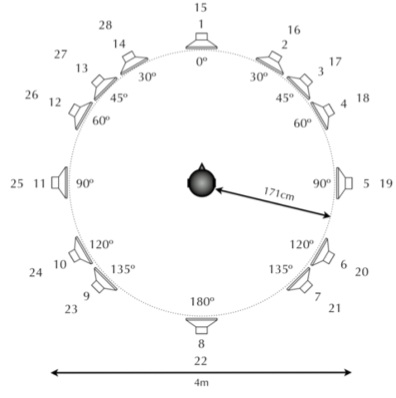

The work was conceived on the SpADE speaker configuration shown below, so was written with the elevation of sounds in mind. As this was implemented before 3D timbre spatialisation was possible, the work focuses on the use of two different circular systems: a lower and upper array of speakers.

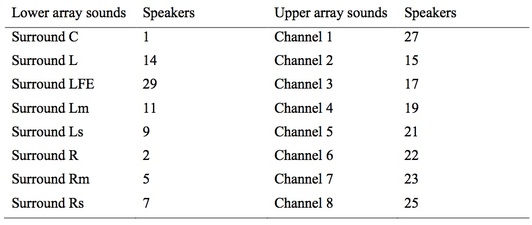

The rendered work consists of a series of audio stems, shown in the following table, which are mapped to specific designated speaker channels at SpADE.

The first stage of this composition, given the limited source material, was to build a corpus of sounds through various signal processes in the frequency domain and time domain. The manipulation and distortion of the source sounds were achieved using a range of tools including MaxMSP 6, Metasynth 5, PaulStretch, and Izotope RX.2. Once working with these tools, the compositional intent quickly shifted from simply manipulating these sounds to processes that allowed for the metamorphosis and transformation of them. The CataRT software is a real-time implementation of corpus-based concatenative synthesis and was one way of deconstructing the source sounds into segments, and finally reconstructing them based on their spectral similarities. This became one of the most widely used tools for exploring this morphology of the original found sounds.

Once this corpus of sound had been derived, it was possible to view the scope of this sound world. Considering the corpus as a collective, it was necessary to decide on a structural arrangement of these different sounds. The audio files were imported into Logic Pro and arranged on the timeline allowing for immediate audition and evaluation of the structure. These audio layers were subsequently mixed with some level of automation.

In the almost 18 months between the writing of this piece and the explorations made in Particle 1, significant software development and investigation into performative approaches of timbre spatialisation was undertaken using Model A. This investigation involved the exploration of various kinds of terrain and trajectory combinations, and later explorations tended to favour the use of circular trajectories, rather than the linear trajectory used in Particle 1, allowing for a more perceptible correlation between the Wave Terrain Synthesis and timbre spatialisation processes. Nevertheless there remained some inherent flaws. One of these was that the highest frequency bins of one spectral frame would naturally spill into the lowest frequency bins of the next, creating an effect very reminiscent of Shepard tones: an infinitely ascending or descending spectrum. Although this can be a desirable effect, it is not indicative of the features presented in the terrain or trajectory structures, and therefore does not promote the concept of a tight and intuitive semantic relationship between this control structure and the sound shape generated. The question ultimately boiled down to aesthetic interpretations, and whether the terrain could be a visual indicator for timbre spread across space and affect the spectral qualities generated across space. Early approaches to Model B take into consideration that local colours of the terrain surface affect the audible colour spectrum of their nearest speaker.
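For readers unfamiliar with Wave Terrain Synthesis, the technique in general reads the height of a two-dimensional surface along a moving trajectory. The sketch below only contrasts the two trajectory types mentioned above; how Model A maps the values read from the terrain onto spectral bins and speakers is not detailed here and is not modelled. The terrain function and all names (`terrain`, `circular_trajectory`, `linear_trajectory`, `read_terrain`) are assumptions chosen for illustration.

```python
import math

def terrain(x, y):
    """An arbitrary smooth terrain over [-1, 1] x [-1, 1] (assumed shape)."""
    return math.sin(math.pi * x) * math.cos(math.pi * y)

def circular_trajectory(n, radius=0.8, cx=0.0, cy=0.0):
    """n points on a closed circle; the path ends where it began."""
    return [
        (cx + radius * math.cos(2.0 * math.pi * k / n),
         cy + radius * math.sin(2.0 * math.pi * k / n))
        for k in range(n)
    ]

def linear_trajectory(n, start=(-1.0, -1.0), end=(1.0, 1.0)):
    """n points along a straight ramp that jumps back to start when repeated."""
    return [
        (start[0] + (end[0] - start[0]) * k / n,
         start[1] + (end[1] - start[1]) * k / n)
        for k in range(n)
    ]

def read_terrain(points):
    """Sample the terrain height at each trajectory point."""
    return [terrain(x, y) for x, y in points]
```

A circular path is periodic by construction, whereas a repeated linear ramp jumps from its end point back to its start on every cycle.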

Although the earlier pieces utilise the mapping strategy in Model A, it was a visit to the ICMC in Slovenia in 2012, and preparation for a lecture I gave at the Naples Conservatory of Music that ultimately led me to pursue the histogram method. Just short of these new developments, I had the opportunity to test the software at the SpADE facility at DMARC. Here it was possible to examine the effectiveness of timbre spatialisation using the WTS control strategy over a 16- and 32-channel loudspeaker configuration. It was with this setup that the detail of spatialisation and immersiveness became apparent. Up until this time, most of the source sounds I used for testing purposes were restricted to static oscillators and noise generators. This was because I was interested in establishing various archetypes of sound shapes possible, as well as exploring the morphology of these shapes by manipulating the terrain and trajectory structures. Navigating between various immersive states could be controlled easily and effectively as I began to explore interpolating between different trajectory states as a means of morphing the sound shapes generated.

Although the sounds spatialised in the lab were invariably mono sources, the results were evolving, engaging and immersive environments. These experiments involved varying degrees of random distribution of spectra across SpADE’s 32-channel speaker configuration while experimenting with natural found sounds I had recorded. Timbre spatialisation appears to be extremely effective in a 16-channel environment for creating immersion, despite being an artificial and abstract recomposition of an auditory scene. Normandeau (2009) describes this phenomenon: “specific to the acousmatic medium is its virtuality: the sound and the projection source are not linked” (p. 278).
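One simple way to picture "varying degrees of random distribution of spectra" is to assign each frequency bin of a mono source to a speaker, with a single control for how often the assignment is random rather than ordered. The sketch below is an assumption made for illustration and not the software used at SpADE; the function `distribute_bins` and its `randomness` parameter are hypothetical.

```python
import random

def distribute_bins(num_bins, num_speakers, randomness, seed=None):
    """Assign each frequency bin to a speaker index.

    randomness -- 0.0 gives an ordered low-to-high spread across speakers,
                  1.0 gives a fully random assignment (assumed control).
    seed       -- optional seed so a distribution can be reproduced.
    """
    rng = random.Random(seed)
    assignment = []
    for k in range(num_bins):
        ordered = k * num_speakers // num_bins  # contiguous bands per speaker
        if rng.random() < randomness:
            assignment.append(rng.randrange(num_speakers))
        else:
            assignment.append(ordered)
    return assignment
```

For example, `distribute_bins(512, 32, 0.5)` would leave roughly half of the bins in their ordered band and scatter the rest across the 32 channels.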

Normandeau (2009) also states that timbre spatialisation recombines the entire spectrum of a sound virtually in the space of the concert hall, and is therefore not a conception of space that is added at the end of the composition process, an approach frequently seen, but a truly composed spatialisation. Although the structure and basic mix for Veden Ja Tulen Elementit were in progress, I was considering the kinds of spatial treatments I would apply to specific layers of the piece. As I had already heard some of these sound shapes generated at DMARC, it was not so difficult to imagine these effects when applied to the sounds generated.

The piece explores these immersive spatial attributes (envelopment, engulfment, presence and spatial clarity) using a variety of movements, from slow circular correlated motion, sound slowly unfolding through space and slow fractal dispersion to fast chaotic movement and the random dispersion of sound. I found that fusing standard point source approaches worked well against the more complex, evolving and immersive sound shapes generated through timbre spatialisation. This allowed the spatialisation to have momentary points of focus, influenced by the multi-levelled space forms and listener zones discussed by Smalley (2007). In this way the timbre spatialisation generates a circumspectral and immersive sound scene from which other sound shapes and point source sounds emerge. Complex sound shapes were also created using fractal terrains and trajectory curves, producing very abstract and unpredictable sound shapes with low- and high-frequency spatial texture. This asynchronous quality of trajectory curves produced irregular, evolving and fluttering movements in the resultant sound shape. The Rössler strange attractor, defined by a system of continuous differential equations, was particularly effective in exhibiting these qualities.
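As a point of reference, the Rössler system is defined by dx/dt = -y - z, dy/dt = x + a·y, dz/dt = b + z·(x - c). The sketch below integrates it numerically to produce a trajectory curve. The parameter values (a = 0.2, b = 0.2, c = 5.7 are commonly used textbook values), the step size, the fourth-order Runge-Kutta integrator, and the function names are assumptions; the settings used in the piece are not stated.

```python
def rossler_step(state, dt, a=0.2, b=0.2, c=5.7):
    """Advance the Rössler system by one fourth-order Runge-Kutta step."""
    def deriv(s):
        x, y, z = s
        return (-y - z, x + a * y, b + z * (x - c))

    def shifted(s, k, h):
        # state s moved by h along derivative k
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2.0))
    k3 = deriv(shifted(state, k2, dt / 2.0))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6.0 * (p + 2.0 * q + 2.0 * r + w)
        for s, p, q, r, w in zip(state, k1, k2, k3, k4)
    )

def rossler_trajectory(n, dt=0.01, start=(0.1, 0.0, 0.0)):
    """Return n successive (x, y, z) points, beginning at start."""
    points = [start]
    for _ in range(n - 1):
        points.append(rossler_step(points[-1], dt))
    return points
```

The x and y components of such a trajectory could, for instance, be scaled to the terrain's coordinate range and used in place of a circular path.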

It was necessary to categorically list and separate these different audio files in order to decide on the spatial treatment appropriate for each sound. These were grouped into five distinct treatments. This table lists them from the most coherent sound shapes down to the most incoherent and noisy. For example, the original stream sample is spatialised using an incoherent noisy distribution, giving the sound an immersive and non-directional quality; however, the descending water samples towards the end of the piece are treated with the first category: slow rotation of a linear ramp function with a circular synchronised trajectory. This particular combination was effective in generating the slow rotational effects applied.

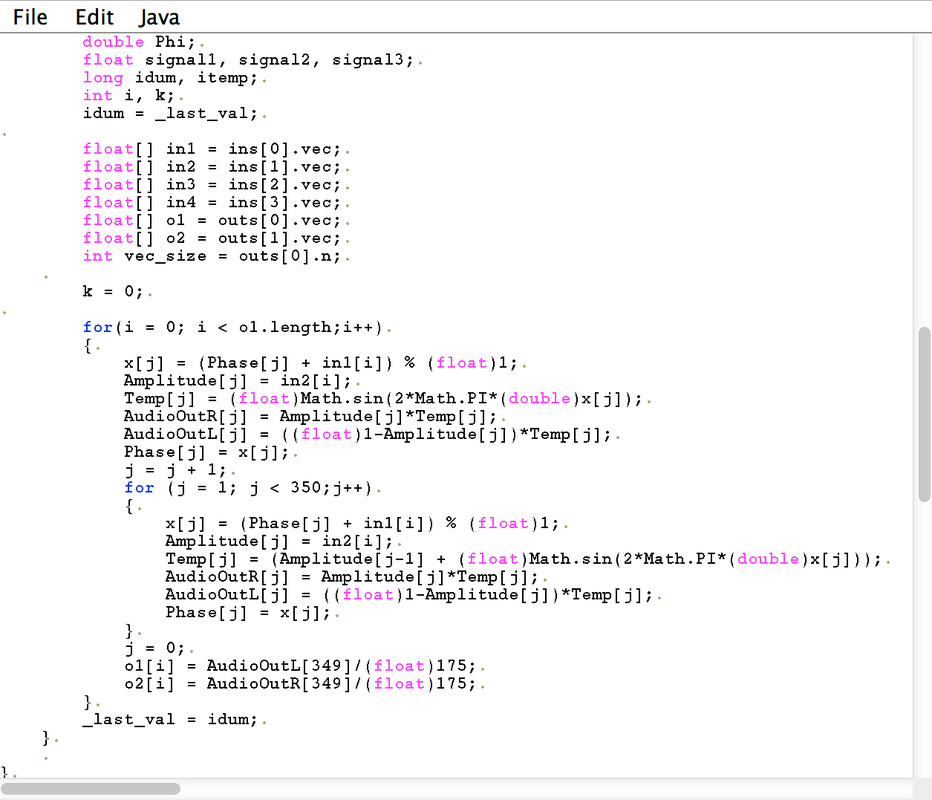

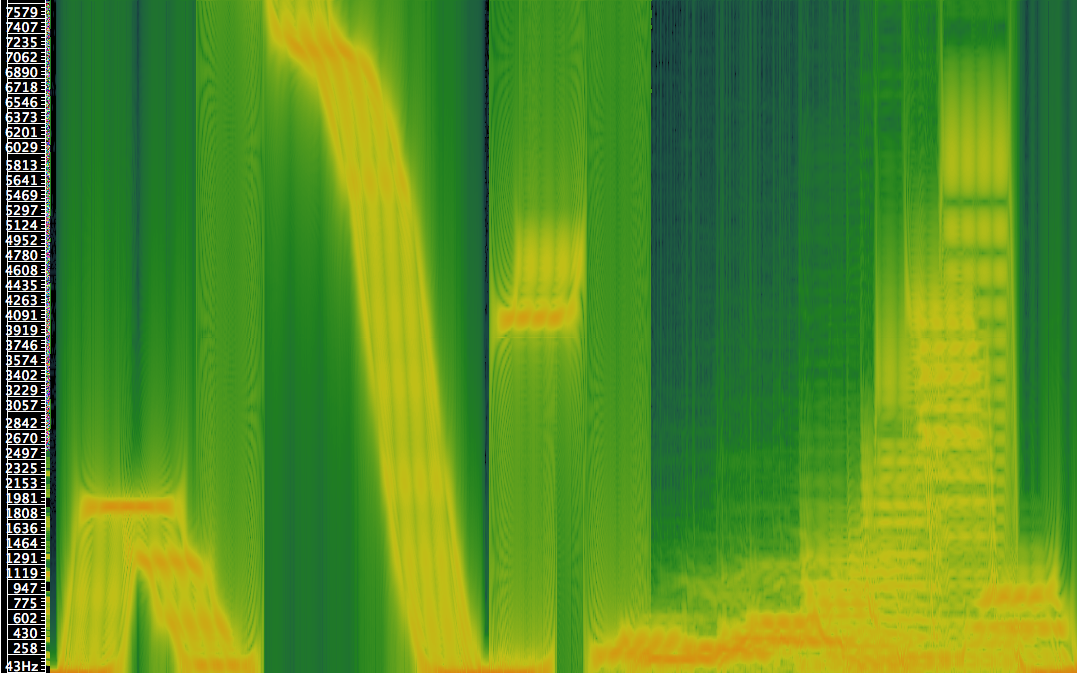

Multichannel spectral analysis performed with the Flux Pure Analyzer Essential application (with the multichannel option) shows a graphical representation of this spiral-like formation generated after applying the timbre spatialisation.

The final stage of this work required a systematic rendering out of individual audio layers. This proved to be a time-consuming process, as each pass had to be done in real time. Each layer was rehearsed and performed, allowing for a musical response to each layer. After initially trying to save these multichannel audio files in MaxMSP, I found the most crash-proof method was bussing the output of MaxMSP into Logic Pro using Soundflower. For the purposes of time synchronicity I added a very short click at the start of each sample so they could be precisely time-aligned within Logic Pro.

At this time I explored similar kinds of spatial transformations in Mace Francis’ When Traffic Rises (2012), Stuart James’ Additive Recurrence (2012) and Erin Coates and Stuart James’ Merge (2013).

Once this corpus of sound had been derived, it was possible to view the scope of this sound world. Considering the corpus as a collective, it was necessary to decide on a structural arrangement of these different sounds. The audio files were imported into Logic Pro and arranged on the timeline allowing for immediate audition and evaluation of the structure. These audio layers were subsequently mixed with some level of automation.

In the almost 18 months between the writing of this piece and the explorations made in Particle 1, significant software development and investigation into performative approaches of timbre spatialisation was undertaken using Model A. This investigation involved the exploration of various kinds of terrain and trajectory combinations, and later explorations tended to favour the use of circular trajectories, rather than the linear trajectory used in Particle 1, allowing for a more perceptible correlation between the Wave Terrain Synthesis and timbre spatialisation processes. Nevertheless there remained some inherent flaws. One of these was that the highest frequency bins of one spectral frame naturally would spill into the lowest frequency bins of the next, creating an effect very reminiscent of the Shepard tones: an infinitely ascending or descending spectrum. Although this can be a desirable effect, it is not indicative of the features presented in the terrain or trajectory structures, and therefore does not promote the concept of a tight and intuitive semantic relationship between this control structure and the sound shape generated. The question ultimately boiled down to aesthetic interpretations, and whether the terrain could be a visual indicator for timbre spread across space and affect the spectral qualities generated across space. Early approaches to Model B take into consideration that local colours of the terrain surface affect the audible colour spectrum of their nearest speaker.

Although the earlier pieces utilise the mapping strategy in Model A, it was a visit to the ICMC in Slovenia in 2012, and preparation for a lecture I gave at the Naples Conservatory of Music that ultimately led me to pursue the histogram method. Just short of these new developments, I had the opportunity to test the software at the SpADE facility at DMARC. Here it was possible to examine the effectiveness of timbre spatialisation using the WTS control strategy over a 16- and 32-channel loudspeaker configuration. It was with this setup that the detail of spatialisation and immersiveness became apparent. Up until this time, most of the source sounds I used for testing purposes were restricted to static oscillators and noise generators. This was because I was interested in establishing various archetypes of sound shapes possible, as well as exploring the morphology of these shapes by manipulating the terrain and trajectory structures. Navigating between various immersive states could be controlled easily and effectively as I began to explore interpolating between different trajectory states as a means of morphing the sound shapes generated.

Although the sounds spatialised in the lab were invariably mono sources, the results were evolving, engaging and immersive environments. These experiments involved varying degrees of random distribution of spectra across SpADE’s 32-channel speaker configuration while experimenting with natural found sounds I had recorded. Timbre spatialisation appears to be extremely effective in a 16-channel environment for creating immersion, despite being an artificial and abstract recomposition of an auditory scene. Normandeau (2009) describes this phenomenon as “specific to the acousmatic medium is its virtuality: the sound and the projection source are not linked” (p. 278).

Normandeau (2009) also states that timbre spatialisation recombines the entire spectrum of a sound virtually in the space of the concert hall, and is therefore not a conception of space that is added at the end of the composition process, an approach frequently seen, but a truly composed spatialisation. Although the structure and basic mix for Veden Ja Tulen Elementit were in progress, I was considering the kinds of spatial treatments I would apply to specific layers of the piece. As I had already heard some of these sound shapes generated at DMARC, it was not so difficult to imagine these effects when applied to the sounds generated.

The piece explores these immersive spatial attributes—envelopment, engulfment, presence and spatial clarity using a variety of movements from slow circular correlated motion, sound slowly unfolding through space and slow fractal dispersion to fast chaotic movement and the random dispersion of sound. I found fusing standard point source approaches worked well against the more complex, evolving and immersive sound shapes generated through timbre spatialisation. This allowed the spatialisation to have momentary points of focus, influenced by the multi-levelled space forms and listener zones discussed by Smalley (2007). In this way the timbre spatialisation generates a circumspectral and immersive sound scene, from which other sound shapes and point source sounds emerge within. Complex sound shapes were also created using fractal terrains and trajectory curves, creating some very abstract and unpredictable sound shapes resulting in low- and high-frequency spatial texture. This asynchronous quality of trajectory curves produced irregular evolving and fluttering movements in the resultant sound shape. The Rössler strange attractor, a continuous differential equation, was particularly effective in exhibiting these qualities.

It was necessary to categorically list and separate these different audio files in order to decide on the spatial treatment appropriate for each sound. These were grouped into five distinct treatments. This table lists coherent sound shapes down to the most inherent and noisy of sound shapes respectively. For example, the original stream sample is spatialised using an incoherent noisy distribution, giving the sound an immersive and non-directional quality; however the descending water samples towards the end of the piece are treated with the first category, slow rotation of linear ramp function with a circular synchronised trajectory. This particular combination was effective in generating the slow rotational effects applied.

Multichannel spectral analysis performed by the Flux Pure Analyzer Essential application (with the multichannel option) show a graphical representation of this spiral- like formation generated after applying the timbre spatialisation.

The final stage of this work required a systematic rendering out of individual audio layers. This proved to be a time-consuming process, as each pass has to be done in real time. Each layer was rehearsed and performed allowing for a musical response to each layer. Initially after trying to save these multichannel audio files in MaxMSP, the most crash-proof method was bussing the output of MaxMSP into Logic Pro using Soundflower. For the purposes of time synchronicity I added a very short click at the start of each sample so they could be precisely time aligned within Logic Pro.

At this time I explored similar kinds of spatial transformations in Mace Francis’ When Traffic Rises (2012), Stuart James’ Additive Recurrence (2012) and Erin Coates and Stuart James’ Merge (2013).

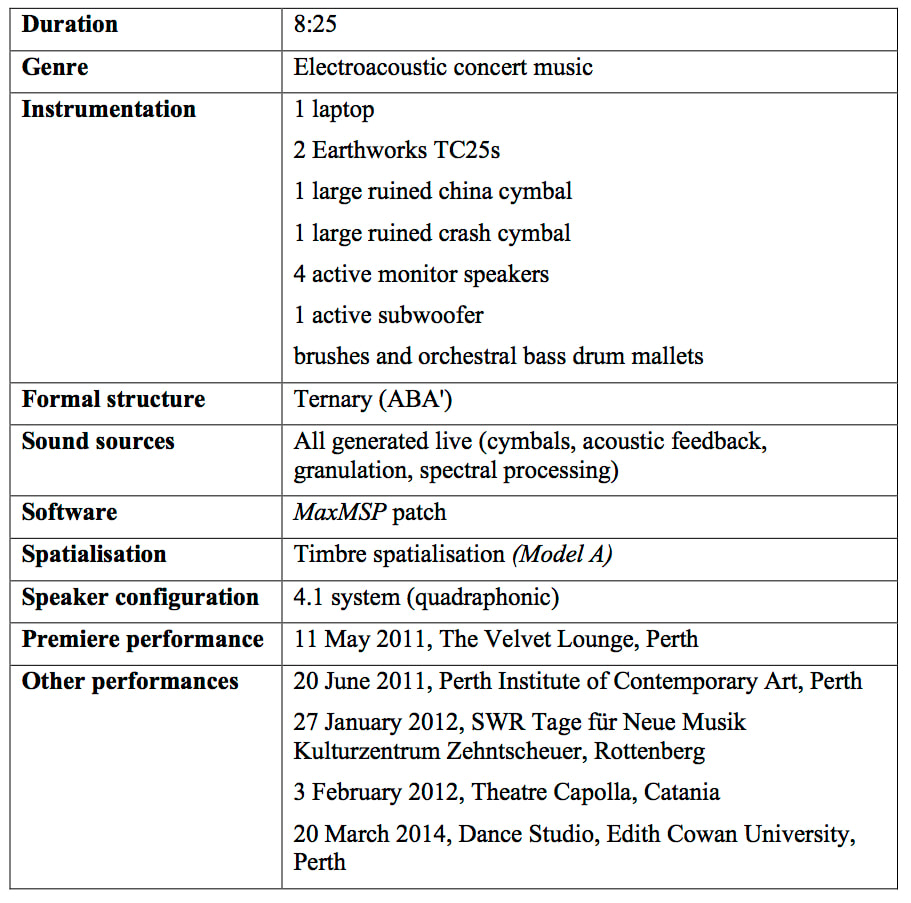

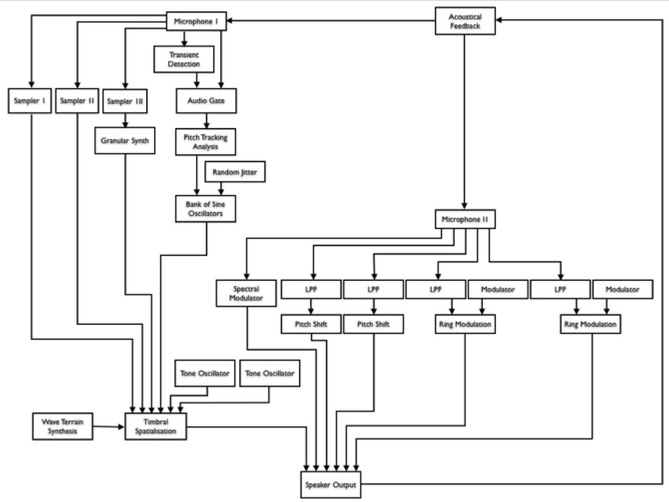

Particle I (2011)

For 2 ruined cymbals and live electronics (quadraphonic)

This piece is the first in a series of my works that explore the use of wave terrain synthesis (WTS) as a means of controlling timbre spatialisation. Particle I began as an electroacoustic experiment at home with a condenser microphone, a ruined cymbal and a KV2 active PA speaker. The work was premiered at The Velvet Lounge, Perth, and also featured in a concert by ensemble Decibel at the Perth Institute of Contemporary Arts (PICA). The work was performed on tour in Europe with ensemble Decibel in January 2012 and was recorded and broadcast on Southwest German Radio.

The cymbal is an instrument rich in harmonics, and is often used for timbral and dramatic embellishment in musical works. This work features the ruined cymbals as sound sources for live laptop processing, ultimately aimed at creating an immersive spectral sound world. Certain on- and off-axis microphone placements around the cymbals create a varied palette of sound colour, which the laptop processing further develops by granulation, spectral modulation and timbre spatialisation.

One of the main questions that arose from writing this piece was the role of sound diffusion in live performance. In many spatial music performances it is quite common for the laptop performer to be largely responsible only for diffusing the sounds, rather than creating, modifying and spatialising these simultaneously. In the case of this work, the priority was to control the sound sources and the way in which these sounds were manipulated, rather than controlling the intricacies of spatialisation, which was instead reliant on an automated system. This was a conscious choice based on what was logistically feasible given I was not only playing the cymbals with mallets, but was also performing the sound synthesis and processing of these sounds, and managing the live diffusion of these sounds.

The technical setup on stage involves two microphones and two ruined cymbals, as well as the live processing rig (laptop and audio interface), and a quadraphonic speaker configuration and subwoofer placed around the audience.

The technical setup involves a specific microphone of choice, the Earthworks TC25 condenser. There are a number of reasons for this. The first is its omnidirectional polar pattern: omnidirectional microphones are often used for measurement and room analysis purposes due to their linear frequency response. The frequency response is also much more extended than that of other condenser microphones, with a range of 9 Hz to 25 kHz, meaning that the microphone is largely linear within the range of human hearing. The dips in the frequency response only exist at the extremities of the microphone’s own range, below 20 Hz and towards 20 kHz.

The microphone is also physically quite compact, most particularly the capsule. It is characteristic of pencil condensers to have a smaller capsule size, but the Earthworks microphone has an uncharacteristically small diaphragm. This allowed the microphones to be placed very close to the source without suffering from the proximity effect, the timbral change that directional microphones exhibit in close proximity to a source.

One of the Earthworks TC25s is placed parallel to a ruined china cymbal. From this position an 18-inch ruined crash cymbal is situated so that its edge also meets perpendicular to the microphone’s capsule. This allows the one microphone to pick up the fundamental pitch of both cymbals. A second TC25 is used as an overhead situated perpendicular to the china cymbal. This second microphone is responsible for picking up detail of the various soft mallets and brushes used to strike the cymbals in the piece.

The starting point of this piece was to find a sound object that had a broad spectral content, as Barreiro (2010) claimed that these kinds of sounds were more suitable for spectral spatialisation and resulted in more diffused and enveloping sound shapes. The cymbal was amplified through the PA speaker in order to reproduce its fundamental frequency. The work evolved by initially working with the acoustic feedback and modulating the live microphone signal to control the way in which this feedback evolved. As the work progressed, the focus shifted not only to sampling and spectrally processing the sound of the cymbals, but also to the nature of the spatialisation applied.

This work depends on sound sources to initiate a chain of events. In this sense the work is reactive, and the sounds made on the cymbals, using either soft mallets or brushes, excite the system into feedback. In this way, the cymbals, the microphones, the loudspeakers and the room are all part of this instrument.

Acoustic feedback was tempered by consistently pitch shifting and ring modulating the sound source. The spatialisation also served to destabilise resulting feedback by introducing time delays and level differences in relation to the microphones. A significant level of granulation and spectral processing allowed for the sound source to be sufficiently ‘abstracted’ that there was no risk of further feedback.
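A minimal sketch of one reason ring modulation can help here: multiplying a signal by a sine carrier moves each frequency component to the sum and difference frequencies, so energy no longer returns to the loop at the frequency where it entered. The sample rate, the 440 Hz test tone and the 100 Hz carrier below are arbitrary illustrative values, not settings from the piece, and the function names are hypothetical.

```python
import math


def ring_modulate(signal, carrier_hz, sample_rate):
    """Multiply each sample by a sine carrier (classic ring modulation)."""
    return [
        s * math.sin(2 * math.pi * carrier_hz * n / sample_rate)
        for n, s in enumerate(signal)
    ]


def magnitude_at(signal, freq_hz, sample_rate):
    """Amplitude of a single frequency component, via a one-bin DFT."""
    re = sum(
        s * math.cos(2 * math.pi * freq_hz * n / sample_rate)
        for n, s in enumerate(signal)
    )
    im = sum(
        s * math.sin(2 * math.pi * freq_hz * n / sample_rate)
        for n, s in enumerate(signal)
    )
    return 2 * math.hypot(re, im) / len(signal)


# One second of a 440 Hz tone, standing in for a resonance that might feed back.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]
shifted = ring_modulate(tone, 100, sample_rate)

# The energy now sits at 440 - 100 and 440 + 100 Hz, not at 440 Hz.
for freq in (340, 440, 540):
    print(freq, round(magnitude_at(shifted, freq, sample_rate), 3))
```

Pitch shifting displaces the spectrum in a comparable way, which is consistent with the destabilising role described above, although the actual processing chain in the piece is more involved than this sketch.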

This thorough testing process was critical for the software design. The first performance of this work was in a pub venue, The Velvet Lounge at Mt Lawley, with a ceiling-mounted PA system and a very small stage. Due to the close proximity of the PA system to the performance stage, the risk of unwanted feedback was considerable. This was perhaps the most volatile of conditions under which to test such a subtle and condition-dependent work; however, the extensive testing helped to maintain a controlled performance. The following performance with ensemble Decibel at PICA was considerably more polished, involving a quadraphonic speaker configuration instead of the two-speaker PA system at The Velvet Lounge.

The sound synthesis in this work explores a number of treatments of the cymbal sound source, from live sampling and granulation to ring modulation, pitch shifting, sine tone resynthesis and vocoder-like spectral processing that is also used to excite the acoustical space, invoking momentary feedback through the system. A schematic of the signal process and flow is shown in the diagram above.

In this early timbre spatialisation experiment there was as much curiosity about the possible resulting sound shapes that could be generated as there was about the implementation itself. This was due to the early and experimental nature of the mapping strategy used.

The work itself did not explore any means of physically performing the diffusion in real time, relying instead on an automated system that was driven by an LFO. This allowed the electronics performer to focus on the timbral qualities of the sounds themselves and the sound-generating means, rather than specifically controlling the way in which the diffusion evolves.

The trajectory used was linear, passing over a stochastically generated polar terrain surface. The trajectory sweeps in one direction, as dictated by the low-frequency oscillator (LFO), before swinging back in the opposite direction, and so on. The only control by the performer is the speed of this LFO. In the work this oscillation starts at 0.02 Hz. This can be heard in Section A of the work, with the processed sound of the brushes over the china cymbal creating low-frequency spatial texture as the sounds shift slowly over the quadraphonic speaker array. The speed of this LFO is increased to over 10 Hz in Section B when the crash cymbal triggers a bank of sine tones. These sine tones are processed with timbre spatialisation, creating a movement that is between low- and high-frequency spatial texture. It is on the cusp of being perceived as a fused image but the micro-morphologies are still evident, creating a fast, shimmering effect across the listener area. This faster movement tended to coalesce into a more immersive and enveloping spatial distribution.
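To give a sense of the time scales involved, the back-and-forth sweep can be sketched as a triangle-shaped LFO. The triangle waveform and the [-1, 1] position range are assumptions made for illustration (the text does not specify the LFO shape), and the function names are hypothetical; only the 0.02 Hz and 10 Hz rates come from the description above.

```python
def triangle_lfo(t_seconds, rate_hz):
    """Position in [-1, 1]: sweeps up for half a cycle, then back down."""
    phase = (t_seconds * rate_hz) % 1.0
    if phase < 0.5:
        return 4.0 * phase - 1.0
    return 3.0 - 4.0 * phase


def sweep_seconds(rate_hz):
    """Time taken for one sweep in a single direction (half an LFO cycle)."""
    return 1.0 / (2.0 * rate_hz)


# At the opening rate, one sweep across the terrain takes 25 seconds;
# at 10 Hz it takes a twentieth of a second.
print(sweep_seconds(0.02))  # 25.0
print(sweep_seconds(10.0))  # 0.05
```

Under these assumptions the change from Section A to Section B is a 500-fold increase in sweep speed, which fits the shift from slowly moving sounds to a fast shimmer.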

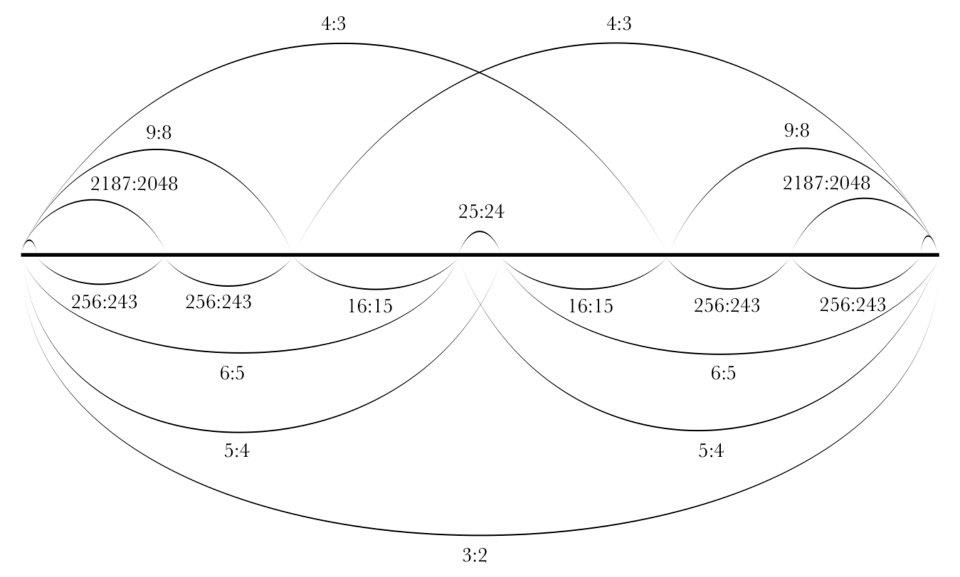

想 (xiǎng) (2003)

Among the few surviving ancient theoretical documents on music, many of the systems described appear to be based on the idea of dividing a string or a resonating tube at various points with respect to the fundamental harmonic relationship of the octave. With the exception of the unison, this interval has the simplest of all harmonic ratios, 2:1. However, in the ancient Chinese systems, and in the origin of pentatonic scales, the importance of the octave seems somewhat diminished. Instead, the pure fifth (3:2) is used as the fundamental relationship from which harmonic (intervallic) relationships are constructed. This piece takes the pure fifth (3:2) as its primary interval, which is divided to form a pitch series through the symmetrical division of intervals in just intonation. Essentially this score varies between a textural, harmonic, and/or spectral structure. Most segments of the score were realised within OpenMusic (Mac OS 9) and subsequently arranged within the Blue Csound compositional frontend tool (Windows XP). From there an audio render was obtained via the WinSound application (Windows XP).
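As a small worked illustration of building intervals from the 3:2 ratio, the sketch below stacks pure fifths and folds them into a single octave, which yields the familiar just-intoned pentatonic set. This is the general textbook construction, shown only as an assumption-laden example; it is not the pitch series of the piece, whose symmetrical division of the fifth is not specified here, and the function names are hypothetical.

```python
import math
from fractions import Fraction


def cents(ratio):
    """Size of a frequency ratio in cents (1200 cents per 2:1 octave)."""
    return 1200 * math.log2(ratio)


def stack_fifths(count):
    """Return `count` ratios generated by repeated 3:2 steps, folded into [1, 2)."""
    ratios = []
    current = Fraction(1)
    for _ in range(count):
        ratios.append(current)
        current *= Fraction(3, 2)
        while current >= 2:
            current /= 2
    return sorted(ratios)


pentatonic = stack_fifths(5)
for ratio in pentatonic:
    print(ratio, round(cents(ratio), 1))
```

The pure fifth comes out at roughly 702 cents, about two cents wider than the equal-tempered fifth of 700 cents.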

The Cat's Eye Nebula (4-channel) (2003)

The Abyss (2003)

This work featured no-input mixing, before I even knew that Toshimaru Nakamura had codified the technique a couple of years prior. I discovered the process independently while exploring preamp distortion through the mixer using positive feedback. It was during this exploration that I discovered that I did not even need a sound source fed into the mixer in order to generate sound; the mixer could generate sound of its own accord. I happened to be obsessed with dynamical systems theory and feedback as concepts, and was experimenting with both in the analog and digital domains. I was also exploring dynamical wave terrain synthesis at the time, and as I was living with sound artist Alan Lamb, we would have regular discussions on entropy and feedback.

Inside a Box (2002)

Like the previous soundscape pieces, Inside a Box is concerned with the manipulation of audio, although this is not so much the core of the composition itself. As in The Realisation…, Inside a Box follows a more profound inner meaning. The Box is a metaphor that works on many different levels. Besides the obvious, that this piece was generated “inside a box” (or a computer), this piece represents a box as the restraints of one's own inner self, mind, body, and spirit, and the perceived bounds of existing within the systems of the state. The piece has been scored out entirely in the C programming language, and compiled using the opcodes within the canonical version of CSound. The piece explores many of the FFT-based functions unique to a handful of programmable software synthesizers. These include FOF and FOG synthesis, additive synthesis/resynthesis, STFT resynthesis, LPC (Linear Predictive Coding) resynthesis, convolution and cross-synthesis, some audio cut-up techniques, and some other features used in the processing of audio for spatialisation purposes (namely sound localisation and HRTF features). Software used in the creation of this piece includes CSound canonical 4.23, WinSound 4.23, Hydra 1.1, CSound Heterodyne, Phase Vocoding & Linear Predictive Coding Utilities, and Sound Forge 6.0.

The Realisation that was Everything and that Nothing Ever Was (2001)

Written whilst the composer was in the midst of a personal crisis and mental health issues, the work plays with the divide between external reality and internal subjective reality (and the liminal space between reality and imagination). By working with referential sound sources, and reproducing these in a way that is believable, the work gradually pushes this envelope to see how far this "truth" can be modified before the listener decides it is false. The work points to aspects of soundscape practice, hyper-reality, and some of the cultural dilemmas concerning the 'perception of truth' that would emerge many years later, such as fake news and deep fakes.

In addition to this, other sound sources, such as insect samples, are heavily detuned by several octaves - the idea at the time was that if we could hear/perceive the music of the animal kingdom, would we be closer to truth than the lies we are subjected to by our own kind? As a child, I had often thought that smaller creatures from the animal kingdom experienced time at a different rate to Homo sapiens. Detuning the insect chorales somehow made me feel like I could normalise that perceived time difference, so that we could experience their music more closely to the way they themselves would hear it.

Philip Samartzis curated this work amongst others to be played in the USA back in 2002 I think? I can't seem to find the details anymore.

Terminal Voltage (2000)

- Stelarc, Vouris, P. James, S. & Nixon-Lloyd, A. (2023). The Anthropomorphic Machine.

- Devenish, L. & James, S. (2023). Liquidities.

- James, S. (2021). Entropic States for 16.1 surround loudspeaker array.

- Coates, E. & James, S. (2020). [Alluvial Gold Installation].

- James, S. (2020). counterpoise for flute, bass clarinet, viola, cello, chaotic oscillator and circuit bent toy (commissioned by Decibel New Music Ensemble as part of their 2 Minutes from Home project).

- Devenish, L. & James, S. (2019). Percipience: After Kaul for 3 Overtone Triangles and electronics.

- James, S. (2017). Noise in the Clouds for Laptop Soloist, Sextet (3 acoustic duos), Fixed Media and Live Electronics (stereo), and Live Generated Visuals.

- James, S. (2016). Particle III for solo laptop and 16.1 surround loudspeaker array.

- James, S. (2016). Intervolver for 8 laptops and 8 loudspeakers (commissioned by the Western Australian Laptop Orchestra (WALO)).

- James, S. (2016). Operation Fortitude for 8 laptops and 8 loudspeakers (commissioned by the Western Australian Laptop Orchestra (WALO)).

- Hope, C. & James, S. (2015). Feather for 24.4 surround sound (3D Ambisonic).

- James, S. (2013). The Overview Effect for Laptop (24.4 channel).

- James, S. (2012). Additive Recurrence for Laptop and Live Feedback.

- James, S. (2012). Veden Ja Tullen Elementit (8-channel).

- James, S. (2011). Particle I for Laptop and 2 Ruined Cymbals (4-channel).

- James, S. (2003). 想 (xiǎng).

- James, S. (2003). The Cat's Eye Nebula (4-channel).

- James, S. (2003). The Abyss for laptop and no-input mixer (4-channel).

- James, S. (2002). Inside a Box.

- James, S. (2001). The Realisation that was Everything and that Nothing Ever Was.

- James, S. (2000). Terminal Voltage.